Hey dude...!

I am back.. 😉😉🤘

I am Nataraajhu

I'll share some knowledge about BUILDING LOCAL AI SERVERS (or) COMPUTERS

These things are all about AI Computers🚨🚨

👀👀 In my world of blogging, every link is a bridge to the perfect destination of knowledge................!

We already know about computers, CPUs & GPUs, mice and keyboards, but the new 21st-century era is different because AI is here. AI needs high computational power and good thermal management. So everyone is building their own AI servers (or) workstations (or) PCs with double and triple GPUs. In this blog, I'll explain what exactly these are..

Today, I'll clear up some doubts...

Why Does Everyone Run LLMs, GenAI & Other Models Locally?

The main factors are....

1- Speed🏃: Lower latency, faster responses.

2- Privacy🌏: No data sent to external servers.

3- Cost💰: Avoids cloud fees.

4- Control🛂: Full customization and offline access.

5- Scalability🙋: No API limits or usage caps.

This is where the computation power requirements come from: AI.

AI workloads are divided into two major phases:

1️⃣ Training – Learning from data (building the model)

2️⃣ Inference – Using the trained model for predictions

Both learning from data and using the trained model for predictions depend on the GPU's VRAM and memory bandwidth.

So What Exactly Are VRAM and Memory Bandwidth?

VRAM (Video RAM)

What: Special memory on the GPU used to store textures, models, AI tensors, etc.

Why: Keeps data close to the GPU cores for fast access.

More VRAM = bigger models or higher-resolution tasks (e.g., 3D, AI, 4K gaming).
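To make "more VRAM = bigger models" concrete, here is a minimal sketch that estimates how much memory the model weights alone need at different precisions. The function names are my own, and the estimate ignores the KV cache, activations, and framework overhead, so treat it as a lower bound.

```python
def weights_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_total = params_billion * 1e9 * bits_per_param / 8
    return bytes_total / 1e9


def fits_in_vram(params_billion: float, bits_per_param: int, vram_gb: float) -> bool:
    """True if the weights alone fit; real usage also needs room for cache and activations."""
    return weights_gb(params_billion, bits_per_param) <= vram_gb


for bits in (32, 16, 8, 4):
    print(f"7B model at {bits}-bit: {weights_gb(7, bits):.1f} GB")

print("7B FP16 on a 24 GB card:", fits_in_vram(7, 16, 24))
print("70B FP16 on a 24 GB card:", fits_in_vram(70, 16, 24))
```

A 7B model in FP16 needs about 14 GB for weights, so it fits on a 24 GB card, while a 70B model in FP16 does not.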

Memory Bandwidth

What: Speed at which data moves between GPU and VRAM (measured in GB/s).

Why: Higher bandwidth = faster model training/inference.

Influenced by memory type (e.g., GDDR6, HBM3) and bus width (e.g., 256-bit, 512-bit).
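The link between bus width, memory speed, and bandwidth can be sketched with a little arithmetic. The example numbers (384-bit bus, 21 Gbit/s per pin) are the published RTX 4090 memory configuration. The tokens-per-second ceiling is only a rough rule of thumb, assuming each generated token has to read all model weights from VRAM once; real throughput is lower.

```python
def gpu_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s = (bus width in bytes) x (per-pin data rate in Gbit/s)."""
    return bus_width_bits / 8 * data_rate_gbps


def max_tokens_per_second(bandwidth_gbs: float, model_size_gb: float) -> float:
    """Rough ceiling for single-stream decoding: every token reads all weights once."""
    return bandwidth_gbs / model_size_gb


# Example: 384-bit bus with 21 Gbit/s GDDR6X memory
bw = gpu_bandwidth_gbs(384, 21.0)
print(f"Peak bandwidth: {bw:.0f} GB/s")
print(f"Ceiling for a 14 GB model: {max_tokens_per_second(bw, 14):.0f} tokens/s")
```

This is why two GPUs with the same VRAM size can feel very different for LLM inference: the one with the wider or faster memory bus can feed its cores more quickly.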

✅ Tips:

1- Use mixed precision (FP16) to cut the VRAM used by weights and activations by up to ~50% compared with FP32.

2- If low on VRAM, try gradient checkpointing or a smaller batch size.
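To see why these tips matter more for training than for inference, here is a rough sketch of the memory needed per parameter. It assumes a common rule of thumb for mixed-precision training with the Adam optimizer (16 bytes per parameter before activations); the constants and function names are illustrative assumptions, not measurements.

```python
# Bytes per parameter (assumed rule of thumb for mixed-precision training with Adam)
FP16_WEIGHTS = 2
FP16_GRADIENTS = 2
FP32_MASTER_WEIGHTS = 4
ADAM_MOMENTUM = 4
ADAM_VARIANCE = 4


def inference_weights_gb(params_billion: float, bytes_per_weight: int = FP16_WEIGHTS) -> float:
    """Weights only; 1 billion params x N bytes = N GB."""
    return params_billion * bytes_per_weight


def training_state_gb(params_billion: float) -> float:
    """Weights + gradients + optimizer state, excluding activations."""
    per_param = (FP16_WEIGHTS + FP16_GRADIENTS + FP32_MASTER_WEIGHTS
                 + ADAM_MOMENTUM + ADAM_VARIANCE)
    return params_billion * per_param


print(f"7B inference (FP32): {inference_weights_gb(7, 4):.0f} GB")
print(f"7B inference (FP16): {inference_weights_gb(7):.0f} GB")
print(f"7B training state:   {training_state_gb(7):.0f} GB + activations")
```

Activation memory comes on top of this and grows with batch size, which is where a smaller batch size and gradient checkpointing help.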

So Now We Are Going to the Hardware Section:

Don't get confused 😕😕

What Is a Workstation vs a Normal PC vs an AI Cluster?

- Normal PC:

This is a standard personal computer designed for everyday tasks like web browsing, office productivity, media consumption, and gaming. It has sufficient performance for daily use, but it isn’t built with specialized components for heavy-duty, professional workloads.

- Workstation:

A workstation is a high-performance PC engineered for professional and compute-intensive tasks such as 3D rendering, video editing, CAD, and scientific simulations. Workstations often feature more powerful CPUs and GPUs, ECC (error-correcting) memory for enhanced reliability, and better cooling, and they are often certified for professional software.

- AI Cluster:

An AI cluster is a networked group of high-performance servers (nodes) designed to work together on large-scale AI and machine-learning tasks. These clusters incorporate many specialized GPUs or AI accelerators, high-speed interconnects, and distributed processing software, enabling them to handle massive data sets and complex computations that a single workstation or PC cannot manage.

What is a Workstation Motherboard vs a Normal Motherboard?

CPU Socket and Chipset

- Workstation:

Often uses chipsets that support higher-end CPUs (or even dual-CPU configurations), offering extra PCIe lanes and enhanced power delivery for stability under continuous heavy loads. They may support ECC (Error-Correcting Code) memory, which increases reliability in critical applications.

- Normal PC:

Uses mainstream chipsets supporting popular consumer CPUs with sufficient performance for daily tasks. ECC memory support is usually absent, as it isn’t necessary for typical consumer workloads.

Memory (DIMM Slots)

- Workstation:

Typically includes more DIMM slots, supports higher memory capacities, and may offer ECC memory for data integrity. This is vital for applications like 3D rendering, scientific simulations, or video editing.

- Normal PC:

Comes with fewer memory slots and is designed for standard RAM capacities without ECC, which is generally adequate for daily use and gaming.

Expansion Slots (PCIe)

- Workstation:

Often provides multiple full-length PCIe x16 slots (sometimes with advanced bifurcation options) to support dual GPUs, specialized accelerator cards, or high-speed storage controllers. These boards are built with reinforced slots and robust cooling solutions to handle continuous heavy workloads.

- Normal PC:

Usually offers one or two PCIe x16 slots with limited lane splitting. They are geared toward one GPU and occasional add-in cards like Wi-Fi adapters or sound cards.

Power Delivery and VRM Design

- Workstation:

Features a more robust VRM (Voltage Regulator Module) design with higher phase counts and better cooling to ensure consistent power under prolonged heavy processing. This is essential for stability during intensive tasks.

- Normal PC:

Has a standard VRM design that balances performance and cost, sufficient for typical consumer usage but not over-engineered for nonstop high-load scenarios.

Storage Connectivity

- Workstation:

Often includes additional M.2 slots, multiple SATA ports, and sometimes support for advanced RAID configurations to provide fast and redundant storage solutions for large data sets.

- Normal PC:

Provides enough storage connectivity for everyday applications, but might have fewer options compared to workstation boards.

Networking and I/O

- Workstation:

May include dual or even quad Ethernet ports (with support for 10 GbE), advanced Wi-Fi, and extra USB ports for high-speed connectivity. These boards often have features like remote management or additional security options.

- Normal PC:

Typically comes with standard Gigabit Ethernet and a mix of USB ports sufficient for daily peripherals, without the extra networking or management features.

Additional Features

- Workstation:

Often certified for stability and performance with professional software (ISV certifications) and designed for 24/7 operation. They may also include enhanced onboard audio, dedicated diagnostic LEDs, and better overall build quality to minimize downtime.

- Normal PC:

Focuses more on aesthetics and cost-effectiveness, providing the features most consumers need without the additional professional-grade extras.

Why Are AMD CPUs Better than Intel in 2025?

As of 2025, AMD CPUs have gained a strong edge over Intel in many areas—especially in AI servers, high-performance computing (HPC), data centers, and cost-effective scaling. Here's why AMD is considered better than Intel in 2025:

🔥 Top Reasons AMD CPUs Are Winning Over Intel (2025)

✅ 1. Zen 4 / Zen 5 EPYC Leadership (e.g., Genoa, Bergamo, Siena, Turin)

- Higher core counts: AMD EPYC 9004/9005 series CPUs scale up to 128 cores (EPYC 9754) or 192 cores (EPYC 9965).

- Better multi-threading and energy efficiency than Intel Xeon Scalable processors (Sapphire Rapids).

- Superior performance per watt, especially in AI and multi-socket configurations.

✅ 2. Advanced Node Manufacturing (TSMC 5nm/4nm)

- AMD’s chips are built using TSMC's advanced 5nm and 4nm nodes, giving them:

- Better performance

- Lower heat output

- Higher density

🆚 Intel's process transition has been slower: Sapphire Rapids and Emerald Rapids Xeons are still built on Intel 7 (formerly called 10nm), and only the newer Xeon 6 parts move to Intel 3.

✅ 3. PCIe 5.0 and DDR5 Leadership

- In servers, AMD's EPYC 9004 platform brought PCIe 5.0 and DDR5 memory to market slightly ahead of Intel's Sapphire Rapids Xeons.

- Critical for high-speed GPUs, NVMe storage, and data-intensive workloads like AI training.

✅ 4. Platform Flexibility (SP5 Socket & Genoa-Compatible Servers)

- More lanes: AMD EPYC CPUs support 128–160 PCIe lanes.

- Better for GPU-dense servers like the Supermicro AS-5126GS-TNRT2, which pair AMD CPUs with NVIDIA GPUs (such as the H100).

- Seamless multi-GPU and NVMe scaling without bottlenecks.

✅ 5. AI & Data Center Dominance

- AMD is now the CPU of choice in many AI-focused servers, thanks to:

- High core density

- Broad IO support

- Lower total cost of ownership (TCO)

- AMD CPUs complement NVIDIA GPUs better in training/inference workloads.

✅ 6. Lower Power Consumption

- Efficiency-first architecture (more performance per watt than Intel).

- Important for edge deployments and green AI data centers.

✅ 7. Better Price-to-Performance Ratio

- For the same price, AMD often offers more cores, better cache, and superior performance.

- Makes AMD the go-to in cloud infrastructure, on-prem AI clusters, and startups scaling AI models.

🏆 Real-World Adoption

- Supermicro, Dell, HPE, and Lenovo all ship AI servers with AMD EPYC CPUs.

- AMD CPUs are used in NVIDIA H100/H200 GPU-based servers.

- Many cloud providers (Azure, Oracle, Google Cloud) now offer EPYC-powered VMs for AI/LLM workloads.

🔮 Summary: Why AMD is Better than Intel in 2025

| Benefit | AMD CPU | Intel CPU |
|---|---|---|
| 🧠 Core Density | ✅ Yes | ❌ No |
| ⚡ Power Efficiency | ✅ Yes | ❌ No |
| 🧩 Platform Scalability | ✅ Yes | ⚠️ Limited |
| 💰 Value for Money | ✅ Yes | ❌ No |
| 🧠 AI Server Support | ✅ Yes | ⚠️ Some |

Example of Comparing Two High-End CPU Processors 👇

AMD EPYC CPU vs Intel Xeon

1️⃣ Higher Core Counts

- AMD EPYC 9004: Up to 96 cores / 192 threads (Zen 4) or 128 cores / 256 threads (Zen 4c).

- Intel Xeon (Sapphire Rapids / Emerald Rapids): Fewer cores per socket (up to 60 / 64).

- Why It Matters: AI workloads require parallel processing, and AMD's higher core counts help in AI inference & training.

2️⃣ Higher Memory Bandwidth & DDR5 Support

- AMD EPYC 9004: 12-channel DDR5 (up to 6TB RAM)

- Intel Xeon: 8-channel DDR5

- Why It Matters: More memory channels = faster AI model training & inference due to quicker data access.
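The effect of channel count can be estimated with simple arithmetic: each DDR5 channel moves 8 bytes per transfer. The sketch below assumes DDR5-4800 on both platforms for a like-for-like comparison; actual supported speeds depend on the exact CPU and DIMM configuration.

```python
def dram_bandwidth_gbs(channels: int, mega_transfers_per_s: int, bus_bytes: int = 8) -> float:
    """Peak CPU memory bandwidth in GB/s = channels x MT/s x bytes per transfer / 1000."""
    return channels * mega_transfers_per_s * bus_bytes / 1000


# Assumption: both platforms populated with DDR5-4800
epyc = dram_bandwidth_gbs(channels=12, mega_transfers_per_s=4800)
xeon = dram_bandwidth_gbs(channels=8, mega_transfers_per_s=4800)

print(f"12-channel DDR5-4800: {epyc:.1f} GB/s per socket")
print(f" 8-channel DDR5-4800: {xeon:.1f} GB/s per socket")
print(f"Ratio: {epyc / xeon:.2f}x")
```

With the same memory speed, 12 channels give 1.5x the peak bandwidth of 8 channels.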

3️⃣ PCIe 5.0 & CXL for Faster GPU & Accelerator Connectivity

- AMD EPYC 9004: 128-160 PCIe 5.0 lanes

- Intel Xeon: Only up to 80 PCIe 5.0 lanes

- Why It Matters: AI needs fast interconnects for GPUs (NVIDIA H100, AMD MI300X), storage, and networking. More lanes = better scalability.
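Here is a small sketch of how the lane counts above translate into full-speed x16 GPU slots. The 16 lanes reserved for NVMe drives and network cards are an assumption for illustration; real boards split lanes in their own ways.

```python
def max_x16_gpus(total_lanes: int, reserved_lanes: int = 0, lanes_per_gpu: int = 16) -> int:
    """How many GPUs can each get a full x16 link after reserving lanes for other devices."""
    return max(0, (total_lanes - reserved_lanes) // lanes_per_gpu)


# Assumption: 16 lanes kept aside for NVMe storage and networking
for name, lanes in (("128-lane platform", 128), ("80-lane platform", 80)):
    print(f"{name}: {max_x16_gpus(lanes)} GPUs with no reserve, "
          f"{max_x16_gpus(lanes, reserved_lanes=16)} GPUs with 16 lanes reserved")
```

With 128 lanes, seven or eight GPUs can run at x16; with 80 lanes, only four or five can, and the rest would have to drop to x8 or share lanes through a PCIe switch.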

4️⃣ Better Power Efficiency (More Performance per Watt)

- AMD EPYC 9004: Uses a chiplet architecture (many small compute dies) → better efficiency.

- Intel Xeon: Sapphire Rapids uses a few large tiles rather than many small chiplets → tends to be more power-hungry at higher core counts.

- Why It Matters: AI training & inference runs 24/7. Lower power usage reduces cooling costs & power bills.

5️⃣ Optimized for AI & HPC Workloads

- AMD EPYC 9004: Supports AVX-512, including VNNI and BFLOAT16 instructions, for AI acceleration.

- Intel Xeon: Also has AVX-512 (plus AMX matrix instructions since Sapphire Rapids), but AMD's AVX-512 implementation is generally considered more power-efficient.

Why Are NVIDIA GPUs Better than AMD & Intel in 2025?

I chose NVIDIA ✋

✅ 1. Superior AI Ecosystem (CUDA + TensorRT + cuDNN)

- CUDA: NVIDIA's proprietary GPU programming platform is mature and widely adopted in research and production.

- TensorRT: Highly optimized for AI inference.

- cuDNN: Acceleration for deep learning primitives.

- 🔁 The alternatives are less mature: AMD's ROCm is still growing, and Intel's oneAPI is newer.

✅ 2. Dominance in AI Hardware (e.g., H100, H200, L40S)

- H100 & H200 (Hopper architecture) are optimized for massive training and inference of LLMs.

- SXM versions offer NVLink high-speed interconnect, unmatched in AMD/Intel GPUs.

- AMD’s MI300X is promising but has less mature software support and a smaller market presence.

- Intel’s Ponte Vecchio is impressive but mainly used in government/supercomputing.

✅ 3. Best Multi-GPU Scaling (NVLink, NVSwitch)

- NVIDIA NVLink/NVSwitch enables fast GPU-GPU communication (essential for LLMs & vision transformers).

- AMD uses Infinity Fabric, and Intel has Xe Link, but both are behind in ecosystem and scalability.

✅ 4. Developer & Community Support

- Most AI frameworks (PyTorch, TensorFlow, JAX) are optimized first for NVIDIA.

- Tons of open-source AI models come with NVIDIA-ready pretrained checkpoints.

- StackOverflow, GitHub, HuggingFace: massive NVIDIA-first projects.

✅ 5. NVIDIA Enterprise & Cloud Integration

- Major cloud providers (AWS, Azure, GCP) heavily use NVIDIA GPUs for AI workloads.

- DGX servers, Grace Hopper Superchips, and Omniverse/AI Foundry tools offer end-to-end NVIDIA-native infrastructure.

✅ 6. Industry Adoption

- Used by OpenAI, Meta, Google DeepMind, Tesla, Waymo, and most LLM developers.

- NVIDIA’s GPUs power the majority of autonomous vehicle stacks, robotics, and computer vision solutions.

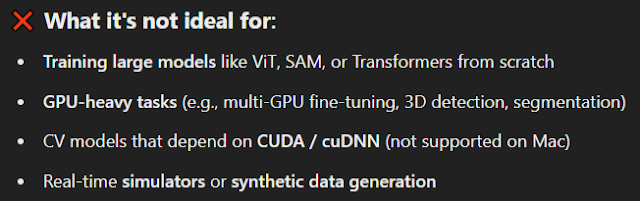

Think About Mac Studios for AI?

I chose the Supermicro AS-5126GS-TNRT2 Server:

Without any Limits: How to Choose the Best AI Server?

1- Supported GPU Types in AS-5126GS-TNRT2?

8x NVIDIA L40S (Ada Lovelace Architecture) – AI Inference & Graphics

8x NVIDIA RTX 6000 ADA – High-End Workstation GPU

8x H100 PCIe or 8x H200 PCIe GPUs (Heavy Training Purpose)

8x A100 PCIe

2- Supported Motherboard in AS-5126GS-TNRT2?

The Supermicro AS-5126GS-TNRT2 server system is built on the Supermicro H13SST-GS motherboard.

3- Supported Storage in AS-5126GS-TNRT2?

The Supermicro AS-5126GS-TNRT2 can potentially support 1PB (petabyte) or higher storage, depending on the drive configuration and external storage solutions.

Internal Storage (Up to 8 Drives)

- 8x U.2 NVMe SSDs (30.72TB each) → ≈ 245TB total.

- 8x SATA/SAS HDDs (24TB each) → ≈ 192TB total.

- Options to choose from: U.2 NVMe, M.2 NVMe, SAN

So, while internal storage alone will not reach 1 PB, adding external JBODs, NAS, or SAN solutions can easily exceed petabyte-level storage. 🚀
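The storage numbers above are simple multiplication, and the same arithmetic shows how far 8 internal bays are from a petabyte. This is a sketch of raw capacity only; RAID, filesystem overhead, and hot spares (not modelled here) reduce the usable space.

```python
import math


def raw_capacity_tb(drive_count: int, drive_size_tb: float) -> float:
    """Raw capacity before RAID or filesystem overhead."""
    return drive_count * drive_size_tb


def drives_needed(target_tb: float, drive_size_tb: float) -> int:
    """Smallest number of drives whose raw capacity reaches the target."""
    return math.ceil(target_tb / drive_size_tb)


print(f"8 x 30.72 TB NVMe: {raw_capacity_tb(8, 30.72):.2f} TB")
print(f"8 x 24 TB HDD:     {raw_capacity_tb(8, 24):.0f} TB")
print(f"Drives for 1 PB with 30.72 TB SSDs: {drives_needed(1000, 30.72)}")
```

Reaching 1 PB of raw capacity takes 33 of the 30.72 TB drives, far more than 8 internal bays, which is why external JBOD, NAS, or SAN storage is needed.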

7- Power Supply AS-5126GS-TNRT2?

8- What is the Cooling System of AS-5126GS-TNRT2?

Last One (Very Important one)

10- 🏠 Home Use Considerations

| Criteria | 3× RTX 4090 (or 5090) PC | Supermicro AS-5126GS-TNRT2 |
|---|---|---|
| 🔌 Power Efficiency | ✅ More efficient per GPU | ❌ Heavy consumption |
| 🔊 Noise Level | ⚠️ High (but manageable) | 🔊 LOUD (jet-engine fans) |
| 🌡️ Cooling Requirements | ⚠️ Needs airflow/AC | ❌ Requires server-grade cooling or cold room |
| 📶 Setup Complexity | ✅ Simple DIY PC | ❌ Enterprise-level setup |
| 🏘️ Home Power Limit | ✅ Standard home wiring can handle it | ❌ May trip breakers or require 32A+ |
| 💡 Daily Power Bill | ₹200–300+ (for hours of use) | ₹700–1000+ (if running full load) |
| 👨‍🔧 Maintainability | ✅ Easy to manage | ❌ Needs experience |
| 🎮 Gaming Friendly | ✅ Perfect | ❌ Not ideal (no HDMI/display outputs) |
| 🧠 Multi-GPU AI Ready | ✅ 3 GPUs okay for most | ✅ Beast mode (8 GPUs) |
| 💰 Cost | ₹6–8 lakhs (assumption) | ₹30–60 lakhs+ (with GPUs) |
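If you want to sanity-check the power rows for your own setup, the arithmetic is short. Every number in this sketch is an illustrative assumption (the tariff, the non-GPU system draw, the server's full-load draw, the hours of use, and 230 V mains), so plug in your own values.

```python
def daily_cost(load_watts: float, hours_per_day: float, tariff_per_kwh: float) -> float:
    """Energy cost per day = kW x hours x price per kWh."""
    return load_watts / 1000 * hours_per_day * tariff_per_kwh


def circuit_amps(load_watts: float, mains_volts: float = 230.0) -> float:
    """Current drawn from the wall, ignoring power-supply losses."""
    return load_watts / mains_volts


# All figures below are illustrative assumptions, not measurements.
TARIFF = 8.0                      # rupees per kWh (assumed)
pc_watts = 3 * 450 + 300          # three 450 W GPUs plus ~300 W for the rest of the PC
server_watts = 5000               # assumed full-load draw of an 8-GPU server

for name, watts, hours in (("3-GPU PC", pc_watts, 8), ("8-GPU server", server_watts, 24)):
    print(f"{name}: {circuit_amps(watts):.1f} A, "
          f"about {daily_cost(watts, hours, TARIFF):.0f} rupees/day for {hours} h")
```

The current figure is the one to compare against your breaker rating: a load above 20 A will not run on a typical 16 A household circuit.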

ABOUT COST

So, Finally, Conclusion:

"GPUs are the engines of tomorrow—powering the future of intelligence, innovation, and immersive experiences."

MUST-WATCH ELEMENTS:

1- How to Use a Cluster of Mac Studios: Link

2- List of the best components for a DOUBLE (or) 2-GPU computer build: Link

3- List of the best components for a TRIPLE (or) 3-GPU computer build: Link

4- X AI Supercluster: Link

5- LLM GPU's Computation Power Real-Time Calculator: Link

6- ML Commons: Link

7- LMSTUDIO: Link

Inside AQ:

PCIe, SXM, Non-SXM, and ECC Slots: Understanding GPU and Memory Interfaces

When dealing with high-performance AI and autonomous vehicle workloads, understanding the differences between PCIe, SXM, non-SXM, and ECC memory slots is crucial for optimizing your Supermicro AS-5126GS-TNRT2 AI server with AMD CPUs and NVIDIA GPUs.

1. PCIe (Peripheral Component Interconnect Express) Slots

- Definition: PCIe is a high-speed interface standard used for connecting GPUs, SSDs, and other expansion cards to the motherboard.

- Usage: PCIe slots are the most common for consumer and enterprise GPUs.

- Versions: PCIe 3.0, 4.0, 5.0, and the upcoming 6.0 (higher versions = more bandwidth).

- Lanes (x1, x4, x8, x16): Determine bandwidth (e.g., PCIe 5.0 x16 ≈ 64 GB/s in each direction, ≈ 128 GB/s with both directions combined).

- AV Use Case: Used in standard NVIDIA RTX/AMD Radeon GPUs for AI training/inference.

- Example GPUs: NVIDIA RTX 4090, A100 PCIe, AMD MI210.
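The bandwidth of a PCIe link follows from the per-lane transfer rate, the line encoding, and the lane count. The sketch below covers PCIe 3.0 to 5.0, which all use 128b/130b encoding; the function name is my own. It gives about 63 GB/s per direction for PCIe 5.0 x16, which is roughly 126 to 128 GB/s when both directions are added together.

```python
# Per-lane raw transfer rate in GT/s for each PCIe generation
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING = 128 / 130   # 128b/130b line encoding used by PCIe 3.0 to 5.0


def pcie_gbs_per_direction(gen: int, lanes: int) -> float:
    """Peak usable bandwidth in GB/s for one direction of a PCIe link."""
    return GT_PER_S[gen] * ENCODING / 8 * lanes


for gen in (3, 4, 5):
    one_way = pcie_gbs_per_direction(gen, 16)
    print(f"PCIe {gen}.0 x16: {one_way:.1f} GB/s per direction, "
          f"{2 * one_way:.1f} GB/s both directions")
```

Each generation doubles the per-lane rate, so a PCIe 5.0 x8 link matches a PCIe 4.0 x16 link.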

2. SXM (Server PCI Express Module) Slots

- Definition: SXM is a high-bandwidth socket designed by NVIDIA for data center GPUs.

- Usage: Found in NVIDIA’s high-end AI GPUs (H100, A100, V100) and used in HGX-based servers.

- Advantages Over PCIe:

  - Higher Interconnect Bandwidth: SXM GPUs use NVLink for direct high-speed GPU-to-GPU communication.

  - Higher Power Budget: Supports GPUs of up to about 700 W (H100 SXM), versus about 350 W for the H100 PCIe card.

  - Better Multi-GPU Scaling: NVSwitch lets 8+ SXM GPUs communicate at full NVLink speed and access each other's memory.

- AV Use Case: Best for deep learning training and large-scale AI models.

- Example GPUs: NVIDIA H100 SXM, A100 SXM, V100 SXM.
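To get a feel for the interconnect gap, here is a rough sketch comparing how long it takes to move data between two GPUs. It uses peak per-direction figures (450 GB/s for H100 SXM NVLink, about 63 GB/s for PCIe 5.0 x16), and the 10 GB payload is a hypothetical example; real transfers are slower than peak on both links.

```python
NVLINK4_GBS = 450.0      # H100 SXM: 900 GB/s total, i.e. 450 GB/s per direction (peak)
PCIE5_X16_GBS = 63.0     # PCIe 5.0 x16: about 63 GB/s per direction (peak)


def transfer_ms(size_gb: float, link_gbs: float) -> float:
    """Ideal time in milliseconds to move size_gb over a link at its peak rate."""
    return size_gb / link_gbs * 1000


payload_gb = 10.0  # hypothetical amount of data exchanged between GPUs

nvlink_ms = transfer_ms(payload_gb, NVLINK4_GBS)
pcie_ms = transfer_ms(payload_gb, PCIE5_X16_GBS)
print(f"NVLink: {nvlink_ms:.1f} ms, PCIe 5.0 x16: {pcie_ms:.1f} ms "
      f"({pcie_ms / nvlink_ms:.1f}x slower)")
```

In multi-GPU training this exchange happens at every step, so the difference adds up quickly.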

3. Non-SXM Slots

- Definition: Refers to GPU interfaces that are not SXM, including PCIe and other proprietary slots.

- Includes: PCIe GPUs, custom mezzanine GPUs (like AMD MI250), or Intel’s Xe GPU form factors.

- AV Use Case: Good for smaller AI models and inference workloads.

- Example GPUs: AMD Instinct MI210, NVIDIA A100 PCIe.

4. ECC (Error-Correcting Code) Memory Slots

- Definition: ECC RAM is designed to detect and correct memory errors that could cause crashes or corrupted data.

- Usage: Found in servers, workstations, and AI servers like your Supermicro.

- Benefits:

  - Prevents bit-flip errors from cosmic rays or electrical interference.

  - Essential for AI training, autonomous vehicle computing, and mission-critical applications.

- AV Use Case: Used in AI model training and real-time processing.

- Example: NVIDIA A100 SXM (uses HBM2e with ECC), AMD Instinct MI250 (HBM2e with ECC).
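To show the idea behind "detect and correct", here is a toy sketch using the classic Hamming(7,4) code, which stores 4 data bits with 3 parity bits and can repair any single flipped bit. This is only an illustration of the principle; real ECC memory uses wider codes over 64-bit words, and the function names here are my own.

```python
def encode(data):
    """Encode 4 data bits into a 7-bit Hamming codeword [p1, p2, d1, p4, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4   # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4   # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def decode(codeword):
    """Return (data bits, error position); position 0 means no error was detected."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s4   # 1-based index of the flipped bit
    if position:
        c[position - 1] ^= 1          # flip it back
    return [c[2], c[4], c[5], c[6]], position


stored = encode([1, 0, 1, 1])
corrupted = list(stored)
corrupted[4] ^= 1                      # simulate a single bit flip in memory
recovered, position = decode(corrupted)
print(recovered, "error at position", position)
```

The parity bits pinpoint which bit flipped, so the original data comes back intact even though the stored word was damaged.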

Cooling system types are:

- Air cooling: Uses fans + heatsink

- Liquid cooling: Uses water blocks + pump + radiator

- Hybrid: Combines air and liquid

LAST WORDS:-

One thing to keep in mind is that AI and self-driving car technologies are vast...! Don't compare yourself to others. Just keep learning..........

Competition and innovation are always happening...!

So you should get really comfortable with change...

So keep learning slowly, step by step, and implement what you learn. Stay motivated and persistent.

I hope you really learned something from this blog

Bye....!

BE MY FRIEND🥂


If you have any doubts, please let me know.